Risk-storming

A visual and collaborative risk identification technique

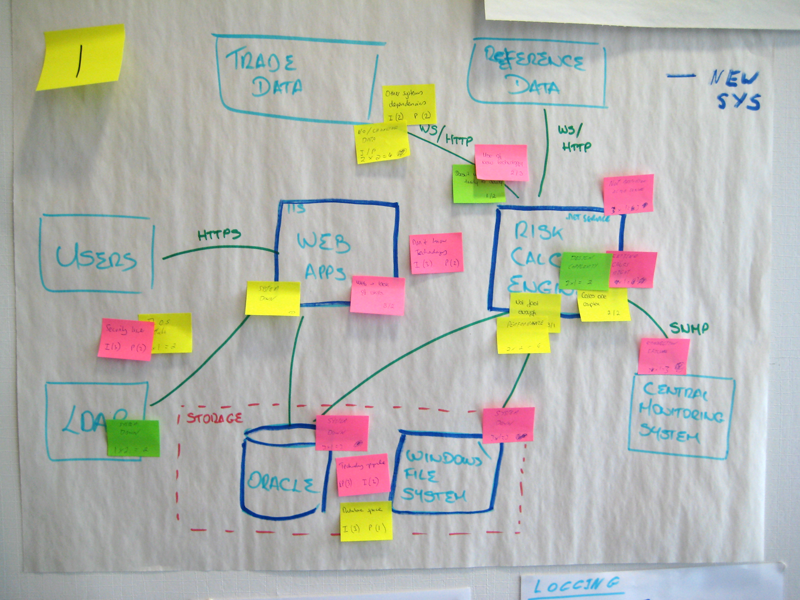

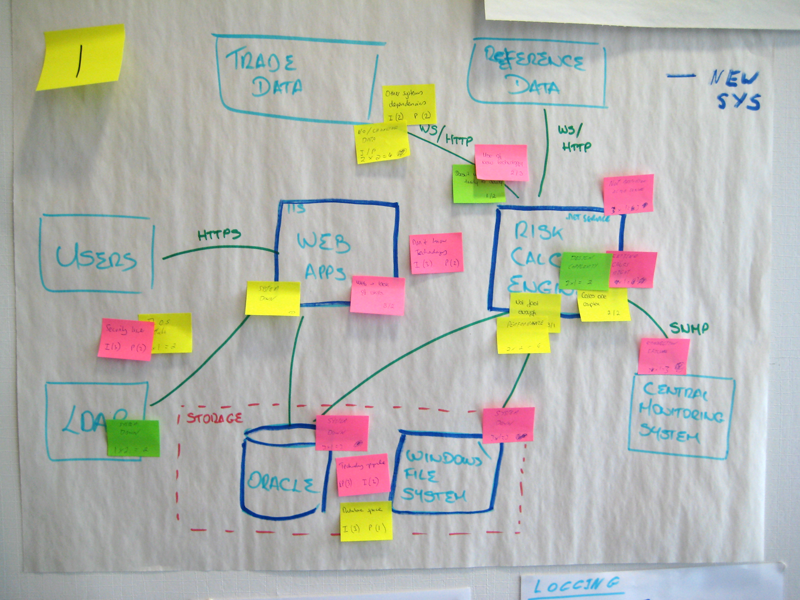

Draw one or more diagrams to show what you're planning to build or change, ideally at different levels of abstraction (e.g. using the C4 model).

Gather people in front of the diagrams, and ask them to identify what they personally perceive to be risky. Write a summary of each risk on a separate sticky note, colour coded to represent low, medium, and high priority risks. Timebox this exercise (e.g. 10 minutes), and do it in silence.

Ask everybody to place their sticky notes onto the diagrams, sticking them in close proximity to the area where the risk has been identified.

Review and summarise the output, especially focussing on risks that only one person identified, or risks where multiple people disagree on the priority.

Background

There is risk inherent in building any piece of software, whether you're building a completely new greenfield project or adding a new feature to an existing codebase. A risk is the possibility that something bad can happen, and there are different types of risk, each with its own potential consequences. Risks come in all shapes and sizes, and can be associated with:

- People: Does the team have the appropriate number of people? Do they have the appropriate skills? Do the team members work well together? Will the leadership roles be undertaken appropriately? Can training or consultants be brought in to help address skill gaps? Will we be able to hire people with the same skills in the future, for maintenance purposes? Does the operations and support team have the appropriate skills to run and look after the software? How likely is it that a key person will leave the team/organisation?

- Process: Does the team understand how they are going to work? Is there a described process? Are there expected outputs and artefacts? Is there enough budget available, both now and in the future? Are there any outside events (e.g. major changes in business, mergers/acquisitions, legal or regulatory changes, etc) that could disrupt progress of the work?

- Technology: Will the proposed architecture be able to satisfy the key quality attributes? Will the technology work as expected? Is the technology stable enough to build upon?

Quantifying and prioritising risks

It's often difficult to prioritise which risks you should take care of and, if you get it wrong, you'll put the risk mitigation effort in the wrong place. For example, a risk related to your software project failing should be treated as higher priority than a risk related to the team encountering some short-term discomfort. How, then, do you quantify each risk and assess its relative priority?

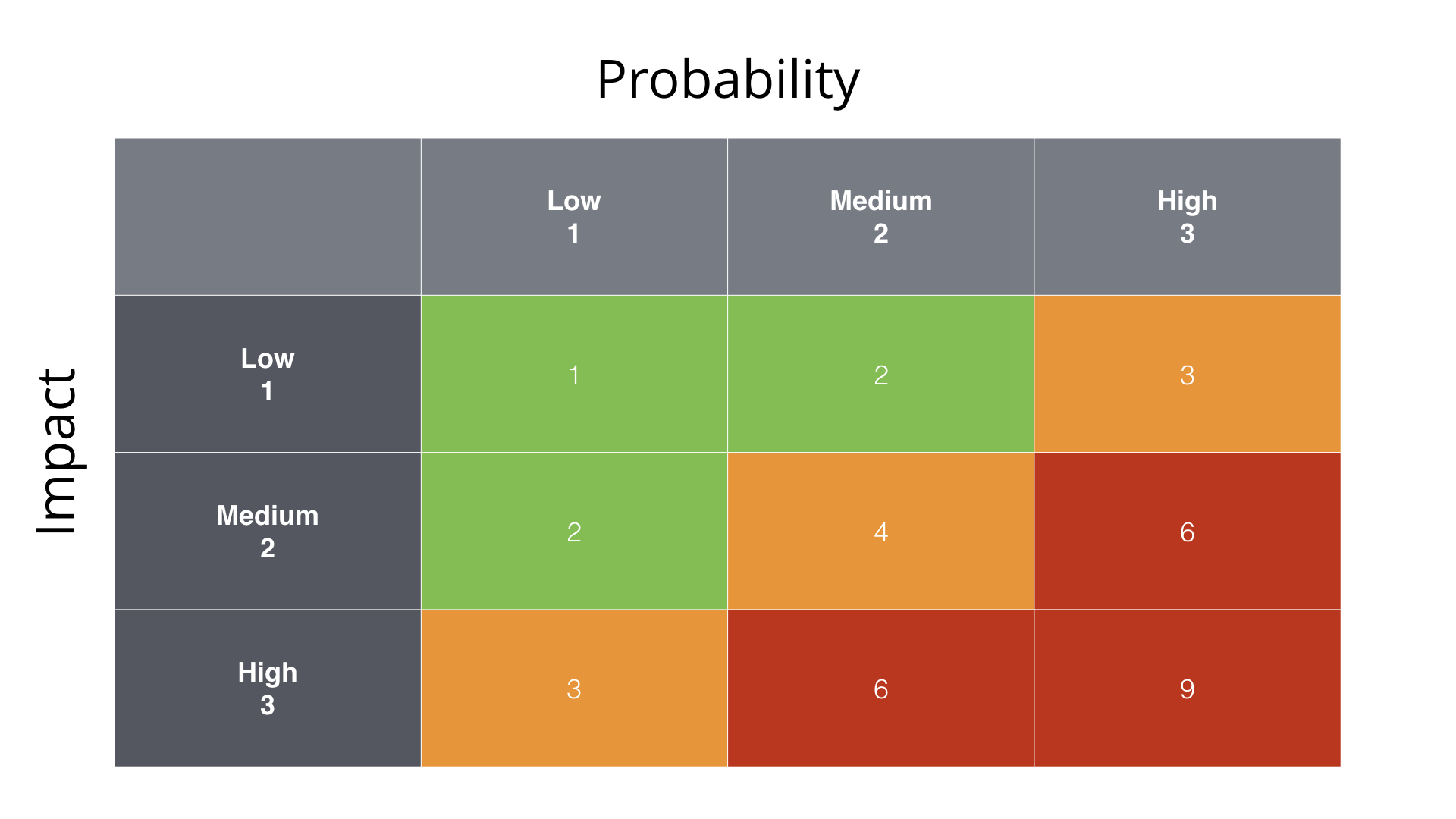

One way to do this is to map each risk onto a matrix, where you evaluate the probability of that risk happening against the negative impact of it happening.

- Probability: How likely is it that the risk will happen?

- Impact: What is the negative impact if the risk does occur?

Both probability and impact could be quantified as low/medium/high or as a numeric value (1-3, 1-5, etc). The following diagram illustrates what a typical risk matrix might look like.

Some examples of the probability and impact include:

- Low probability: The chance of the risk happening is low; we don't think the risk will happen.

- High probability: The chance of the risk happening is high; there's a very real possibility that the risk will happen.

- Low impact: Short-term discomfort for the team (minor rework, extended hours), minor outages, accumulation of additional unwanted technical debt, etc.

- High impact: Project is cancelled, staff fired, major outages, major data loss, loss of public reputation, loss of income, lawsuits, etc.

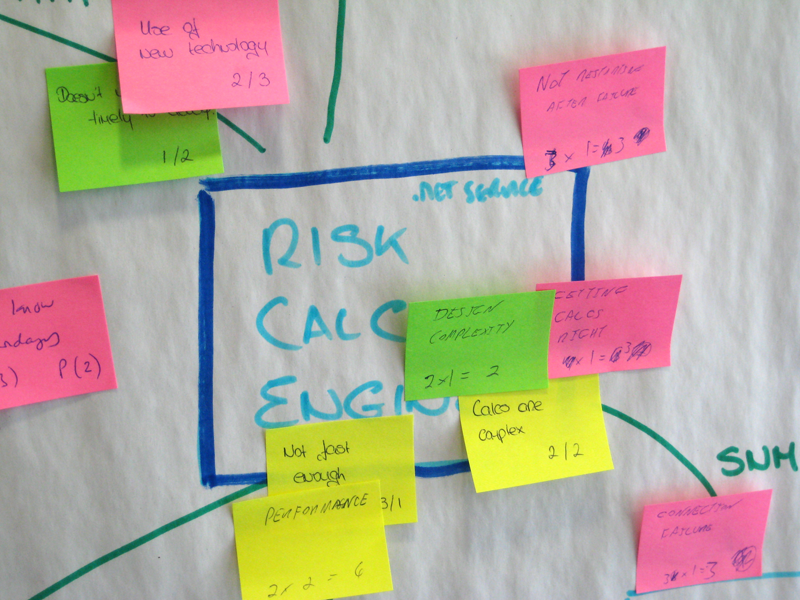

Prioritising risks is then about ranking each risk according to its score, which can be found by multiplying its probability and impact values from the risk matrix together. A risk with a low probability and a low impact can be treated as a low priority risk. Conversely, a risk with a high probability and a high impact needs to be given a high priority. The colour coding indicates the priority:

- Red: a score of 6 or 9; the risk is high priority.

- Amber: a score of 3 or 4; the risk is medium priority.

- Green: a score of 1 or 2; the risk is low priority.
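As a minimal sketch of the scoring scheme above, assuming a 1-3 scale for both probability and impact, the score and colour band could be computed as follows. The names `Level`, `risk_score`, and `priority_colour` are illustrative assumptions and not part of the technique itself.

```python
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical 1-3 scale used for both probability and impact."""
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def risk_score(probability: Level, impact: Level) -> int:
    """Multiply probability by impact to get a score of 1, 2, 3, 4, 6, or 9."""
    return int(probability) * int(impact)


def priority_colour(score: int) -> str:
    """Map a score to the colour bands listed above."""
    if score >= 6:   # 6 or 9
        return "red"
    if score >= 3:   # 3 or 4
        return "amber"
    return "green"   # 1 or 2
```

With a different scale (e.g. 1-5), the thresholds would need to be chosen again; the ones here only match the 3x3 matrix described above.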

There are other ways to quantify risks too (e.g. OWASP Risk Rating Methodology) and some organisations use information security classification levels (e.g. public, restricted, confidential, top secret, etc) as the basis for understanding the risk associated with specific pieces of data being exposed by a security breach (e.g. UK Government Security Classifications). You may also have your own formal risk evaluation approaches if you're building health and safety-critical software systems.

Identifying risks with risk-storming

Now that we understand how to quantify risks, we need to step back and look at how to identify them in the first place. One of the worst ways to identify risks is to let a single person do this on their own. Unfortunately, this tends to be the norm. The problem with asking a single person to identify risks is that you only get a view based upon their perspective, knowledge and experience. As with software development estimates, an individual's perception of risk is based upon their own experience, and is therefore subjective. For example, if you're planning on using a new technology, the choice of that technology may or may not be identified as a risk depending on whether the individual identifying the risks has used it in the past.

A better approach to risk identification is to make it a collaborative activity, and something that the whole team can participate in. This is essentially what we see with software estimates, when teams use techniques such as Planning Poker or its predecessor, Wideband Delphi. One approach is to run something called a pre-mortem where you imagine that your project has failed, and you discuss/brainstorm the reasons as to why it failed, and ultimately what caused that failure.

Another is "risk-storming": a quick, fun, collaborative, and visual technique for identifying risk that the whole team (architects, developers, testers, project managers, operational staff, etc) can take part in. There are 4 steps:

1. Draw some software architecture diagrams

The first step is to draw some architecture diagrams on whiteboards or large sheets of flip chart paper. Ideally this should be a collection of diagrams at different levels of abstraction, because each diagram (and therefore level of detail) will allow you to highlight different risks across your architecture. These diagrams should show what you are planning to build, or planning to change. The C4 model for visualising software architecture works well.

2. Identify the risks (individually)

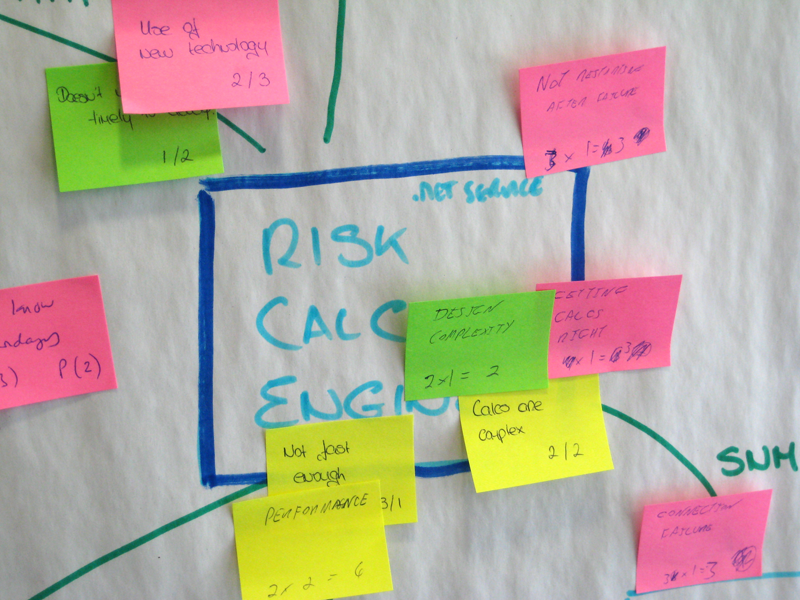

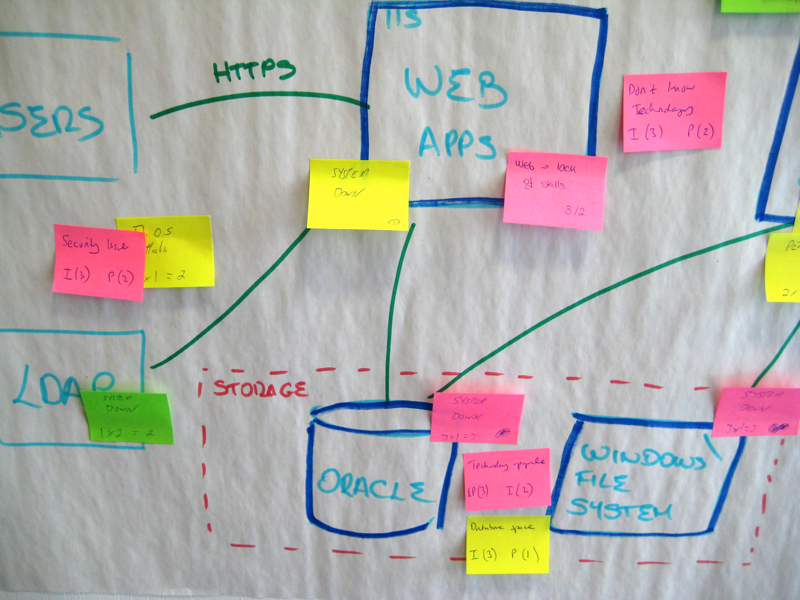

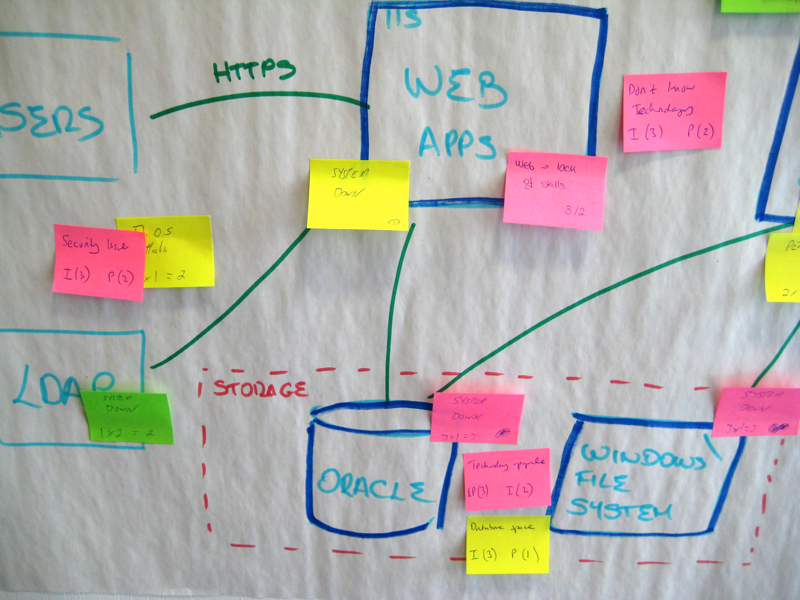

Since risks are subjective, step 2 is about asking everybody to look at the architecture diagrams and to individually (i.e. in silence, without collaboration) write down the risks that they can identify, one per sticky note. Each risk should be quantified according to the probability and impact.

Ideally, use different colour sticky notes to represent the different risk priorities (e.g. pink for high priority risks, yellow for medium, green for low). You can timebox this part of the exercise to (e.g.) 10 minutes, and this step should be done in silence, with everybody keeping their sticky notes hidden from view so they don't influence what other people are thinking.

Here are some examples of the risks to look for:

- Data formats from third-party systems change unexpectedly.

- External systems become unavailable.

- Components run too slowly.

- Components don't scale.

- Key components crash.

- Single points of failure.

- Data becomes corrupted.

- Infrastructure fails.

- Disks fill up.

- New technology doesn't work as expected.

- New technology is too complex to work with.

- The team lacks experience of the technology.

- Off-the-shelf products and frameworks don't work as advertised.

- etc

The goal of risk-storming is to seek input from as many people as possible so that you can take advantage of the collective experience of the team. If you're planning on using a new technology, hopefully somebody on the team will identify that there is a risk associated with doing this. Also, one person might quantify the risk of using that new technology relatively highly, whereas others might not feel the same if they've used that same technology before. Asking people to identify risks individually allows everybody to contribute to the risk identification process, so that you'll gain a better view of the risks perceived by the team rather than only from those designing the software or leading the team.

3. Converge the risks on the diagrams

Next, ask everybody to place their sticky notes onto the architecture diagrams, sticking them in close proximity to the area where the risk has been identified. For example, if you identify a risk that one of your components will run too slowly, put the sticky note over the top of that component on the most appropriate architecture diagram.

This part of the technique is very visual and, once complete, lets you see at a glance where the highest areas of risk are. If several people have identified similar risks you'll get a clustering of sticky notes on top of the diagrams as people's ideas converge.
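If the sticky notes are later transcribed, the clustering described above can also be tallied. The following is only a sketch, assuming each note records the diagram element it was stuck on and a numeric score; `StickyNote` and `hotspots` are hypothetical names introduced for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class StickyNote:
    """One transcribed sticky note (illustrative structure, not prescribed)."""
    author: str
    element: str   # the diagram element the note was placed on
    summary: str
    score: int     # probability x impact, as judged by the author


def hotspots(notes: list[StickyNote]) -> list[tuple[str, int]]:
    """Sum the scores per diagram element, highest total first."""
    totals: Counter[str] = Counter()
    for note in notes:
        totals[note.element] += note.score
    return totals.most_common()
```

Summing scores is one possible choice; counting notes per element, or taking the maximum score, would highlight different things.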

4. Review and summarise the risks

Finally, review and summarise the output, especially focussing on risks that only one person identified, or risks where multiple people disagree on the priority. The output should be a risk register, where all of the risks are logged with the agreed priority. If you decide that something turns out not to be a risk, keep a note of this too.
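The two cases worth discussing in the review (a risk raised by only one person, and a risk where people disagree on priority) can be picked out mechanically once the notes are written up. This is a rough sketch, assuming similar notes have already been grouped by hand under a shared label; `review_flags` and the tuple layout are hypothetical.

```python
from collections import defaultdict


def review_flags(notes: list[tuple[str, str, str]]) -> dict[str, str]:
    """Flag risks that deserve discussion during the review.

    Each note is (author, risk_label, priority), where risk_label is the
    shared label assigned when grouping similar sticky notes together.
    """
    votes_by_risk: dict[str, dict[str, str]] = defaultdict(dict)
    for author, label, priority in notes:
        votes_by_risk[label][author] = priority

    flags: dict[str, str] = {}
    for label, votes in votes_by_risk.items():
        if len(votes) == 1:
            flags[label] = "identified by one person only"
        elif len(set(votes.values())) > 1:
            flags[label] = "priority disagreement"
    return flags
```

Risks that several people identified with the same priority are not flagged, although they still belong in the risk register.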

When to use risk-storming

Risk-storming is a quick technique that provides a collaborative way to identify and visualise risks. As an aside, this technique can be used for anything that you can visualise, from enterprise architectures through to business processes and workflows. It can be used at the start of a software development project (when you're coming up with the initial software architecture) or throughout, during iteration planning sessions or retrospectives. Just make sure that you keep a log of the risks that are identified, including those that you later agree have a probability or impact of "none".

Additionally, why not keep the architecture diagrams with the sticky notes on the wall of your project room so that everybody can see this additional layer of information? Identifying risks is essential in preventing project failure, and it doesn't need to be a chore if you get the whole team involved.

Mitigating risks

Identifying the risks associated with your software architecture is an essential exercise, but you also need to come up with mitigation strategies, either to prevent the risks from happening in the first place, or to take corrective action if the risk does occur. Since the risks are now prioritised, you can focus on the highest priority ones first. There are a number of mitigation strategies that are applicable depending upon the type of risk, including:

- Education: Training the team, restructuring it, or hiring new team members in areas where you lack experience (e.g. with new technology).

- Writing code: Creating prototypes, proofs of concept, concrete experiments, walking skeletons, etc where they are needed to mitigate technical risks by proving that something does or doesn't work. Since risk-storming is a visual technique, it lets you easily see the stripes through your software system that you should perhaps look at in more detail.

- Re-work: Changing your software architecture to remove or reduce the probability/impact of identified risks (e.g. removing single points of failure, adding a cache to protect from third-party system outages, etc). If you do decide to change your architecture, you should re-run the risk-storming exercise to check whether the change has had the desired effect, and not introduced other high priority risks.

Risk-storming and this website were created by Simon Brown
